Brief Introduction

Vector space model or term vector model is an algebraic model for representing text documents (and any objects, in general) as vectors of identifiers. The representation of a set of documents as vectors in a common vector space is known as the vector space model. It is fundamental to a host of information retrieval operations ranging from scoring documents on a query, document classification and document clustering. It is used in information filtering, information retrieval, indexing and relevancy rankings.

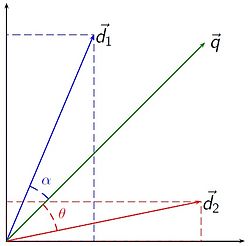

Documents and queries are represented as vectors. Each dimension in the vectors corresponds to separate terms in the query. If the term in query appears in the document, then the corresponding value in the vector will be non-zero and zero if it doesn’t appear in the document. Among many different ways to calculate those values, tf-idf weighting is one of the most popular ones.

In information retrieval, tf–idf, short for term frequency–inverse document frequency, is a numerical statistic that is intended to reflect how important a word is to a document in a collection or corpus. It is often used as a weighting factor in information retrieval and text mining. The tf-idf value increases proportionally to the number of times a word appears in the document, but is offset by the frequency of the word in the corpus, which helps to adjust for the fact that some words appear more frequently in general.

In simple words, a word is given more importance if it appears abundantly in a document while the same word loses its importance if it appears more frequently in the corpus (collection of documents). Thus a word with rare appearances in the corpus bears more relevance to a specific document if it appears in that document than some other word which appears a lot in the document while appearing equally or more abundantly in the corpus. For an instance, “the handsome tourist” contains three different terms “the”, “handsome”, and “tourist”. If we to only account term frequency for similarity measure, “the” would clearly overshadow other two words. “The” appears many number of times but doesn’t bear any substantial importance. While, “handsome” and “tourist” are the words that are more important for our purpose. Inverse document frequency (idf) takes care of that.

Term Frequency

The number of times a term occurs in a document is called its term frequency.

Inverse Document Frequency

It is the logarithmically scaled inverse fraction of the documents that contain the word. It checks whether the term is common or rare across all documents in the corpus.

idfterm = log10(total number of documents/Number of documents the term appears in)

To know how to calculate tf-idf and cosine similarities, click here.